The email arrived with the subject line that’s become all too familiar: “Automate Everything With AI — No Code Required!”

I’ll admit it — I clicked.

After years of building automation workflows, the promise of browser agents felt like the holy grail. Imagine: just describe what you want, and AI handles the rest. No more fiddling with APIs, no more debugging webhooks, no more late nights trying to figure out why your Zapier flow broke.

So when I had a simple automation need last week, I thought: This is the perfect chance to test these tools for real.

Spoiler alert: It didn’t go as planned.

The Setup: A Simple Automation Challenge

My goal was straightforward — the kind of task that browser agents supposedly excel at:

Create an n8n workflow that sends daily learning materials to my Telegram and Discord channels.

That’s it. Five simple steps:

- Generate or pull content using OpenAI

- Format it appropriately

- Send to Telegram

- Send to Discord

- Run automatically every day

This isn’t rocket science. I’ve built dozens of similar workflows manually using traditional automation tools and techniques. It should take maybe 20–30 minutes if you know what you’re doing.
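For reference, here is roughly what those five steps look like as plain Python. This is a minimal sketch, not the n8n workflow itself: `run_daily_lesson`, `generate`, and `senders` are hypothetical names, and the content generator and the senders are passed in as functions so the shape of the pipeline is visible without any real API calls.

```python
from typing import Callable, Iterable


def run_daily_lesson(
    generate: Callable[[], str],
    senders: Iterable[Callable[[str], None]],
    title: str = "Daily learning",
) -> str:
    """Generate content, format it, and hand it to every sender."""
    raw = generate()                        # step 1: e.g. a call to OpenAI
    message = f"{title}\n\n{raw.strip()}"   # step 2: formatting
    for send in senders:                    # steps 3 and 4: Telegram, Discord
        send(message)
    return message


# Stand-ins for the real services: a canned "generator" and two list-backed senders.
delivered = []
run_daily_lesson(
    lambda: "  Generators yield values lazily.  ",
    [delivered.append, delivered.append],
)
print(delivered[0])
```

Step five is not in the code. A scheduler would call the function once a day, for example n8n’s Schedule Trigger or a cron entry such as `0 9 * * *` (9:00 every day).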

But here’s the thing — browser agents promise you don’t need to know what you’re doing. They promise to be the expert for you.

Time to find out if that’s true.

Round 1: ChatGPT Atlas (10 Minutes of Frustration)

I started with ChatGPT Atlas, OpenAI’s entry into browser automation. If anyone can crack this, it should be OpenAI, right?

I opened n8n, launched Atlas, and gave it clear instructions:

“Create a workflow that uses OpenAI to generate daily content and sends it to both Telegram and Discord.”

Atlas responded confidently. It understood the task. It started moving.

And then… everything fell apart.

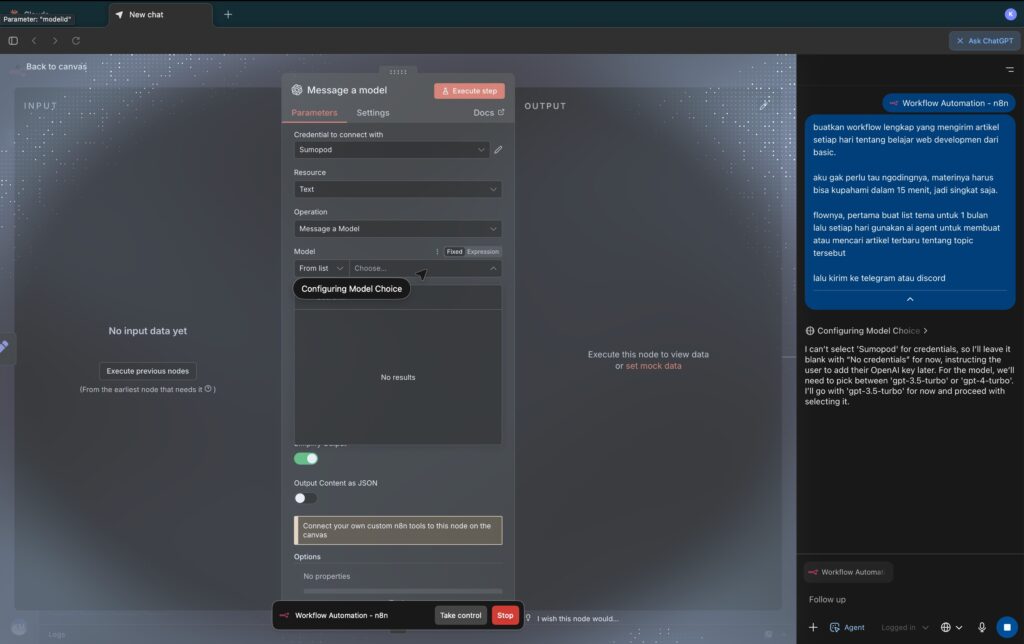

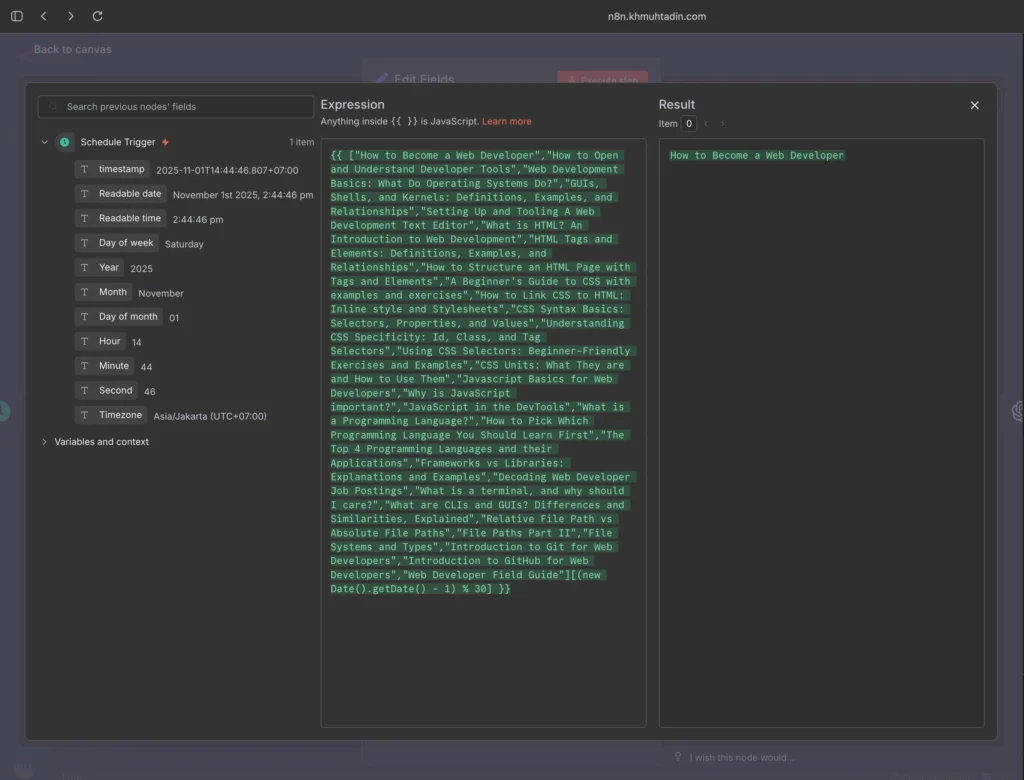

Minute 1–3: The Node Selection Disaster

The first task in any n8n workflow is selecting nodes — the building blocks of automation. You click, you search, you add the node you need.

Atlas couldn’t do it.

I watched as it hovered over elements, clicked empty spaces, and generally wandered around the interface like a tourist without a map. When it finally did click on something, it was the wrong node.

Okay, I thought. Maybe it just needs a moment to orient itself.

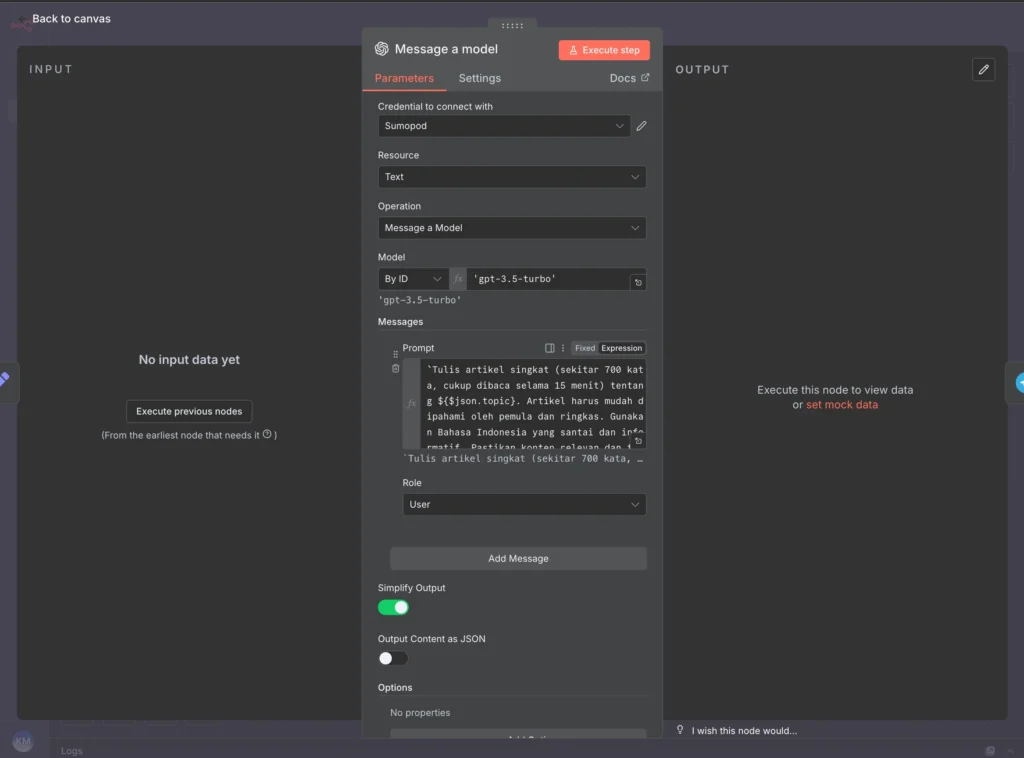

Minute 4–7: Expression Hell

In n8n, you connect nodes by passing data between them using expressions. It’s fundamental. Node A generates data, Node B uses that data.
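To make the idea concrete: an expression is a placeholder in one node’s field that gets filled from the previous node’s output. Real n8n expressions are JavaScript evaluated inside `{{ }}`; the Python below is only a toy stand-in that handles the simplest form, `{{ $json.field }}`. The `resolve` function and the sample data are my own illustration, not n8n code.

```python
import re

# Matches a simplified placeholder such as {{ $json.text }}
PLACEHOLDER = re.compile(r"\{\{\s*\$json\.([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def resolve(template: str, previous_output: dict) -> str:
    """Replace each {{ $json.field }} with the value the previous node produced."""
    def lookup(match: re.Match) -> str:
        field = match.group(1)
        if field not in previous_output:
            raise KeyError(f"previous node produced no field {field!r}")
        return str(previous_output[field])

    return PLACEHOLDER.sub(lookup, template)


node_a_output = {"text": "Today: Python generators"}   # what Node A produced
node_b_field = "Lesson of the day: {{ $json.text }}"   # what Node B's field contains
print(resolve(node_b_field, node_a_output))
```

An empty field, which is what the agent kept leaving behind, means Node B never receives anything from Node A.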

Atlas couldn’t figure it out.

It would select a field, then just… stop. No expression. No reference to the previous node. Just blank stares at the screen (metaphorically speaking).

I tried helping. I gave more specific instructions. I broke it down into smaller steps.

Nothing worked.

Minute 8–10: Complete Failure

By minute eight, I had a sinking feeling. By minute ten, I accepted the truth.

After 10 minutes, I had zero working components.

Not a single node properly configured. Not a single connection made correctly. Not a single step toward my goal.

Complete. Total. Failure.

Round 2: Comet Browser (Hope Dies Even Faster)

Maybe it was Atlas-specific, I reasoned. Maybe Comet Browser would be better.

Narrator: It was not better.

The Three-Minute Saga

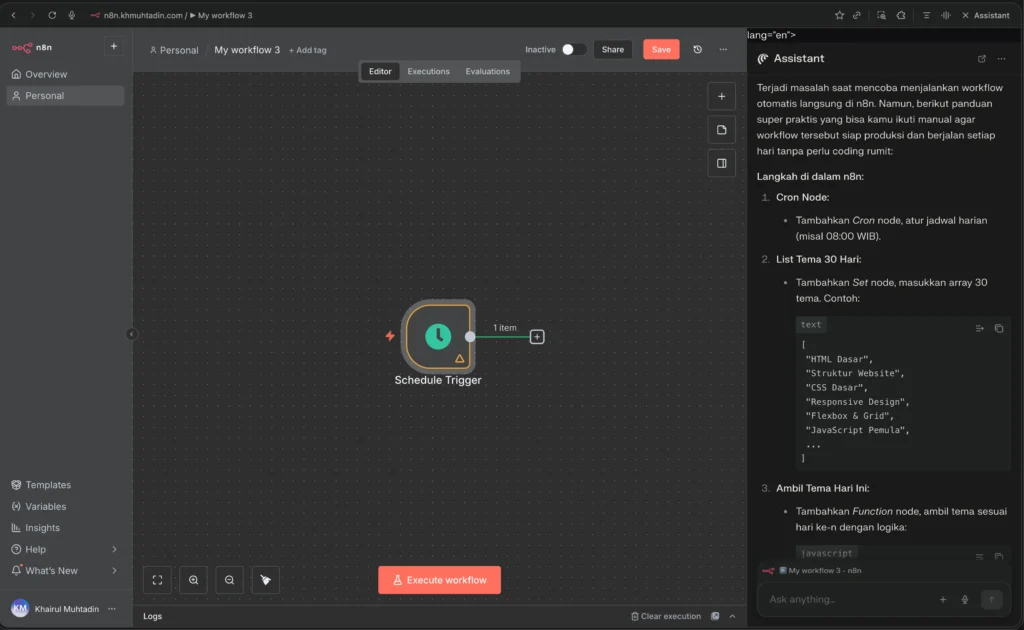

Comet Browser did manage one thing: it created a schedule trigger.

Congratulations, Comet. You successfully configured the easiest part of the entire workflow — the part that literally just says “run this daily.”

Everything after that? Roadblock city.

I watched it struggle with the same issues: interface confusion, context loss, inability to understand workflow logic.

At the three-minute mark, I gave up.

Not because I’m impatient. But because I could see this was going nowhere. The pattern was identical to Atlas. The technology simply wasn’t there.

The Uncomfortable Truth About Browser Agents in 2025

Here’s what nobody wants to say out loud:

Browser agents are nowhere near as capable as their marketing suggests.

Let me be clear — this isn’t about these specific tools being bad. This is about the entire category being oversold.

What Browser Agents Promise:

- ✨ Automate complex workflows with natural language

- ✨ No technical knowledge required

- ✨ Set it and forget it automation

- ✨ AI that learns and adapts

What They Actually Deliver:

- 😬 Struggles with basic UI navigation

- 😬 Requires constant human supervision

- 😬 Frequent failures on simple tasks

- 😬 Limited learning between attempts

The gap isn’t small. It’s enormous.

Why Browser Agents Fail: The Technical Reality

After 13 minutes of failed attempts, I took time to understand why these tools struggle so much.

1. Interface Understanding is Harder Than It Looks

Web interfaces are designed for humans with context, intuition, and pattern recognition. Browser agents are trying to navigate these interfaces like a person would, but they lack the visual and spatial understanding humans have.

When you see an n8n workflow builder, you instantly recognize: “That’s where nodes go. That’s the search bar. That’s a connection line.”

AI sees: pixels, DOM elements, and a confusing hierarchy of divs.

2. Context Gets Lost Quickly

Building a workflow requires maintaining state:

- “I just added this node”

- “Now I need to configure it”

- “Then connect it to the next node”

- “Using the output from the previous step”

Browser agents lose this thread constantly. They forget what they just did. They can’t plan multiple steps ahead. Each action is semi-independent of the last.

3. Domain Knowledge Can’t Be Faked

Understanding how to build an automation workflow requires knowledge that goes beyond clicking buttons. You need to understand:

- What a trigger does

- How data flows through nodes

- When to use expressions vs. static values

- How to handle errors and edge cases

The AI doesn’t have this knowledge. It’s guessing based on patterns, and those guesses are wrong more often than right.

4. No Real Error Recovery

When something goes wrong (which is constantly), browser agents can’t diagnose or recover effectively. They either retry the same failed action or give up entirely.

There’s no real problem-solving happening — just pattern matching that breaks down when patterns aren’t perfect.
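For contrast, even basic hand-written automation usually separates failures worth retrying from failures that need a different approach. The sketch below shows that pattern under my own assumptions: `PermanentError` and `with_retries` are hypothetical names, and the exponential backoff is one common choice rather than anything these tools are known to do.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class PermanentError(Exception):
    """Hypothetical marker for failures that retrying cannot fix."""


def with_retries(
    action: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run action, retrying temporary failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except PermanentError:
            raise                    # retrying the same action will not help
        except Exception:
            if attempt == attempts:
                raise                # out of attempts: surface the real error
            sleep(base_delay * 2 ** (attempt - 1))   # wait 1s, 2s, 4s, ...
    raise ValueError("attempts must be at least 1")
```

The important branch is the first `except`: a wrong selector or a missing credential is raised immediately instead of being retried in a loop.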

What This Means for Your Automation Strategy

If you’re considering browser agents for automation, here’s my honest, tested advice:

✅ DO Use Browser Agents For:

Experimentation and learning — They’re fascinating to play with and can teach you about AI’s current limitations.

Simple, linear tasks — Very basic workflows with 2–3 steps might work on a good day.

Non-critical processes — If failure is acceptable and you can afford the downtime, go ahead and try.

Research and development — Understanding where the technology is today helps you plan for where it’ll be tomorrow.

❌ DON’T Use Browser Agents For:

Business-critical workflows: Your business can’t run on tools that failed both of my attempts at a simple five-step workflow.

Complex automation — Anything requiring multiple steps, conditional logic, or error handling is beyond current capabilities.

Time-sensitive projects — You’ll spend more time debugging than you would have building manually.

Production environments — Just don’t. Not yet. Not even close.

What Actually Works for Automation Today?

If you need automation that works right now, here’s what I recommend based on years of experience:

1. Traditional No-Code Automation Tools

Zapier, Make.com, and manually configured n8n are still far more reliable than any browser agent. Yes, you have to learn them. But they work consistently, have proper error handling, and won’t randomly fail.

2. Direct API Integrations

API connections are stable, predictable, and well-documented. The initial learning curve pays off quickly with reliable, maintainable automation.
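As an illustration, both delivery steps from my workflow come down to one HTTP POST each. The sketch below uses only the standard library and builds the requests for Telegram’s `sendMessage` Bot API method and a Discord webhook. The function names, token, chat ID, and webhook URL are placeholders I made up, and sending is kept in a separate function so the requests can be inspected without touching the network.

```python
import json
import urllib.request

# Some endpoints reject urllib's default user agent, so set one explicitly.
HEADERS = {"Content-Type": "application/json", "User-Agent": "daily-lesson-bot/0.1"}


def telegram_request(bot_token: str, chat_id: str, text: str) -> urllib.request.Request:
    """Build a POST to the Telegram Bot API sendMessage method."""
    url = f"https://api.telegram.org/bot{bot_token}/sendMessage"
    body = json.dumps({"chat_id": chat_id, "text": text}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=HEADERS, method="POST")


def discord_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build a POST to a Discord webhook URL."""
    body = json.dumps({"content": text}).encode("utf-8")
    return urllib.request.Request(webhook_url, data=body, headers=HEADERS, method="POST")


def send(request: urllib.request.Request, timeout: float = 10.0) -> int:
    """Send a prepared request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

With real credentials, `send(telegram_request(token, chat_id, text))` delivers the message, and `urlopen` raises `urllib.error.HTTPError` if the service returns an error status.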

3. AI-Assisted Coding (The Hybrid Approach)

Use ChatGPT or Claude to generate automation code, then implement and test it yourself. This combines AI’s speed with human reliability and judgment.

This is actually how I build most of my workflows now, and you can learn more about effective AI-assisted development on my blog.
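The “test it yourself” half can be small. Suppose the AI hands you a helper that splits long content to fit Discord’s 2,000-character message limit (Telegram allows 4,096). The `split_message` function below is a hypothetical example of such a helper, not code from my actual workflow, and the assertions after it are the kind of quick check I mean.

```python
def split_message(text: str, limit: int = 2000) -> list[str]:
    """Split text into chunks of at most `limit` characters, preferring line breaks."""
    if limit < 1:
        raise ValueError("limit must be positive")
    chunks = []
    remaining = text
    while len(remaining) > limit:
        # Look for the last newline that keeps the chunk within the limit.
        cut = remaining.rfind("\n", 0, limit + 1)
        if cut <= 0:
            cut = limit              # no usable line break: hard cut
        chunks.append(remaining[:cut])
        remaining = remaining[cut:].lstrip("\n")
    if remaining:
        chunks.append(remaining)
    return chunks


# Quick checks a human can read and verify before trusting the helper.
assert split_message("short") == ["short"]
assert split_message("a" * 10 + "\n" + "b" * 10, limit=12) == ["a" * 10, "b" * 10]
assert all(len(chunk) <= 10 for chunk in split_message("x" * 25, limit=10))
```

A few assertions like these take a minute to write and catch the off-by-one mistakes that generated code tends to contain.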

4. Professional Automation Services

Sometimes the best automation tool is a person with expertise. If your time is valuable and the automation is critical, hiring someone who knows what they’re doing is often the most cost-effective solution. Contact me for a tailored solution.

The Future of Browser Agents (When Will They Actually Work?)

Despite my frustrating experience, I’m not pessimistic about browser agents long-term.

The technology is improving rapidly. Every month brings:

- Better language models

- Improved visual understanding

- More sophisticated reasoning capabilities

- Better error handling

What failed today will probably work in 6–12 months. The trajectory is clear.

The vision is compelling: autonomous automation that adapts to changing interfaces, learns from mistakes, and handles complex processes without human intervention.

That future is real. It’s coming.

But it’s not here yet.

And pretending it is — investing time, money, and critical business processes based on promises rather than current capabilities — is a costly mistake.

Key Takeaways: Browser Agents Reality Check

Let me summarize what 13 minutes of testing taught me:

1. Test Before You Trust: Don’t take marketing claims at face value. Spend an hour testing with your actual use cases before committing.

2. The Gap is Real: Browser agents are 12–18 months away from being production-ready for complex workflows. Plan accordingly.

3. Hybrid Approaches Win: AI-assisted development (where AI helps but humans verify) beats fully autonomous browser agents every time.

4. Traditional Tools Still Reign: For reliability, nothing beats properly configured automation tools with human oversight.

5. Stay Informed: The technology is evolving fast. What’s true today might not be true in six months. Keep testing periodically.

My Final Recommendation

Browser agents represent exciting technology with genuine potential. But they’re also immature technology with significant, documented limitations.

My advice:

- For personal projects: Experiment freely, but have backups

- For business use: Wait 6–12 months or use hybrid approaches

- For critical systems: Stick with proven automation tools

The hype is ahead of reality. Make decisions based on what browser agents can do today, not what they promise to do tomorrow.

Work With Me: Practical Automation That Actually Works

If you’re frustrated with overhyped tools and want automation strategies that work in the real world, I can help.

I specialize in building reliable, maintainable automation workflows using proven tools and AI-assisted development techniques. No hype, no promises — just practical solutions that deliver results.

Contact me to learn more about automation consulting services →

My services include:

- Custom workflow development

- Automation strategy consulting

- Tool evaluation and selection

- Training for your team

FAQ: Browser Agents and Automation

What are browser agents?

Browser agents are AI-powered tools that can navigate web interfaces and perform tasks autonomously, similar to how a human would use a browser.

Are browser agents worth trying in 2025?

For experimentation and learning, yes. For production use or business-critical workflows, not yet. The technology is still too unreliable.

What’s the best alternative to browser agents?

Traditional automation tools like Zapier, Make.com, or n8n with manual configuration are far more reliable for production workflows.

When will browser agents be production-ready?

Based on the current pace of development, I expect reliable browser agents for complex workflows in 12–18 months.

Which browser agent is best?

None are production-ready yet. ChatGPT Atlas and Comet Browser both showed similar limitations in my testing.